This is a website for an H2020 project which concluded in 2019 and established the core elements of EOSC. The project's results now live further in www.eosc-portal.eu and www.egi.eu

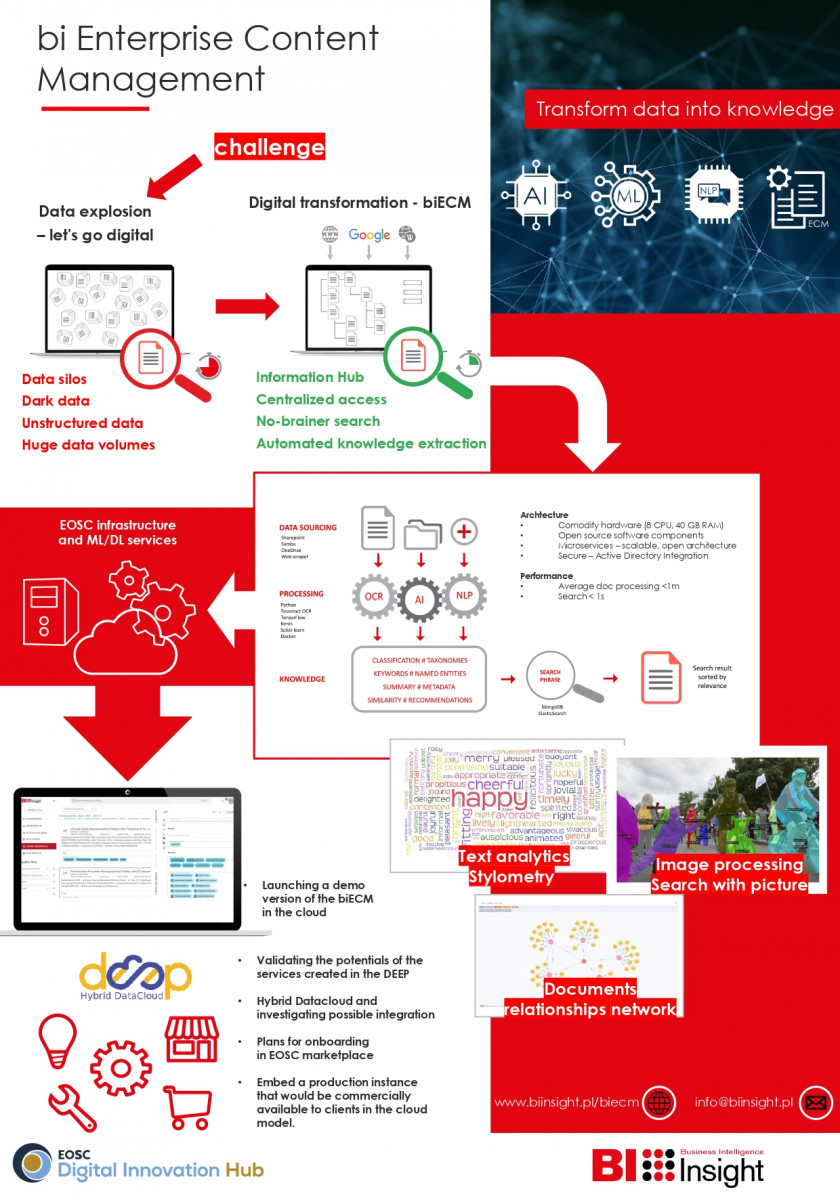

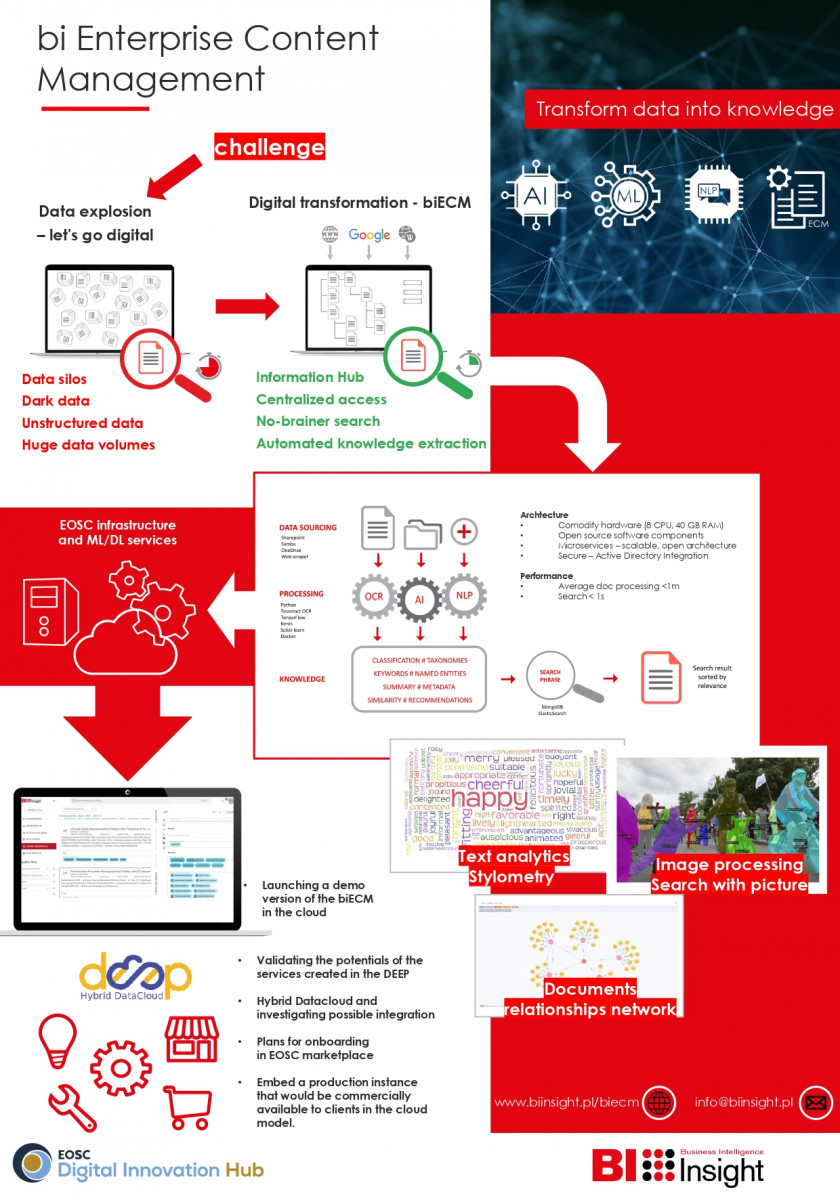

The Best Poster is: Poster #1 bi Enterprise Content Management by Grzegorz Niemiec, Magdalena Makowska, Małgorzata Filipek, Mateusz Romaniuk, Piotr Piórkowski, Dorota Kot, EOSC DIH.

The best poster was selected according to the following criteria:

See the rest of the entries below:

This poster shows early results from one of the EOSC DIH Business Pilots: BI Insight - Business Intelligence, Artificial Intelligence and Big Data technologies.

biECM is a system that gives users access to the knowledge contained in files: presentations, text documents, sheets and others. The system uses Natural Language Processing and Machine Learning algorithms for recommendations, document classification, information retrieval (both from text and from images embedded in documents) and summarisation, taking into account all language-specific variations.

The growing volume of electronic documents of all kinds in every organization, especially in large organizations, government institutions and administration, drives the search for effective methods of working with such documents: searching them quickly, classifying them and making full use of the information they contain. To meet the challenge of improving the sharing of knowledge collected in the organization, we designed and implemented a solution using mechanisms of artificial intelligence and natural language processing.

A user-friendly search engine using artificial intelligence mechanisms allows you to accelerate the process of obtaining information and optimize work in the organization, increasing personal productivity and efficiency of information circulation processes. The use of these features in scientific and academic environments can significantly contribute to accelerating the development of science, innovation, and discovery.

The poster shows what the system's creators had in mind: an outline of the problem, the challenge, the mechanism of action, the technical capabilities, and the type of support received from EOSC and DEEP HybridDatacloud.

Thanks to cooperation with EOSC DIH and DEEP HybridDatacloud offering cloud infrastructure and ML/DL services and integration support, we can launch a biECM demonstration version in the cloud: a test version for users who would like to know the functionality of the system.

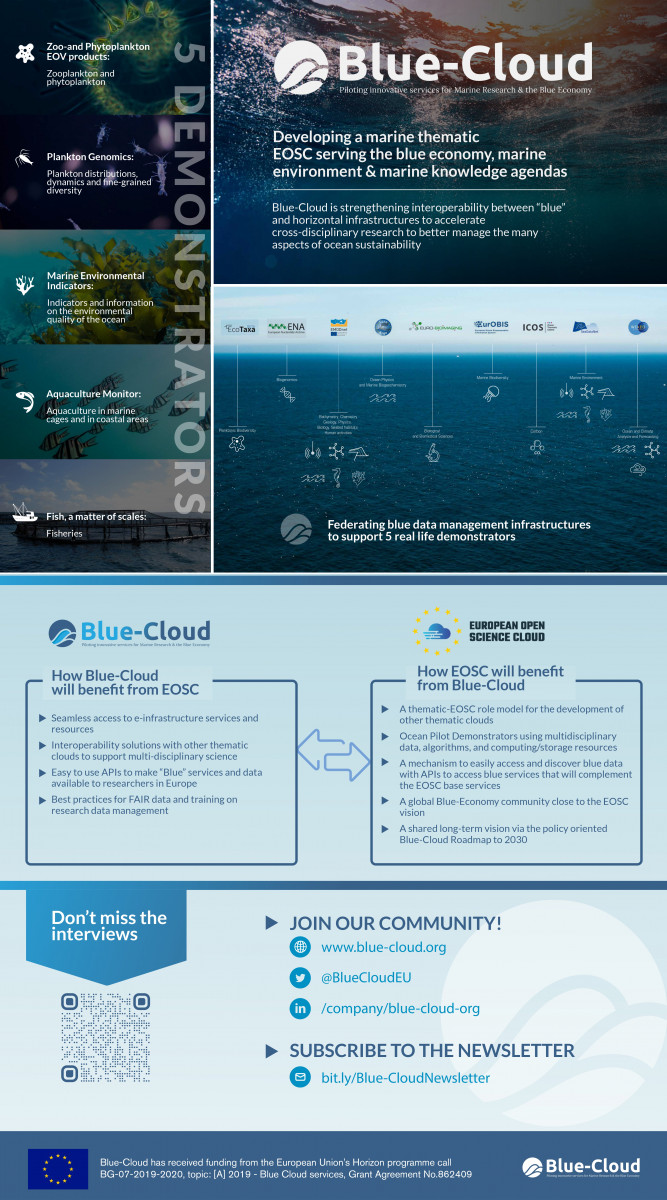

Blue-Cloud is a European H2020 project aiming to federate and pilot innovative services for Marine Research & the Blue Economy. It will develop a marine thematic cloud for EOSC to explore and demonstrate the potential of cloud-based open science for better understanding and managing the many aspects of sea and ocean sustainability. At the opening of the All Atlantic Ocean Research Forum (6-7 February 2020), Mariya Gabriel, the European Commissioner for innovation, research, education and youth, highlighted Blue-Cloud as a key instrument for the sustainable ocean strategy.

Blue-Cloud is the flagship project of the DG RTD Unit of the European Commission that will link the horizontal e-infrastructures supported by DG CNECT (e.g. EUDAT & D4Science) and DG GROW (e.g. Copernicus DIAS), long-term marine data initiatives supported by DG MARE (e.g. EMODnet), research infrastructures supported by DG RTD and other recently funded thematic clouds (e.g. Food Cloud and Transport Cloud). It federates leading European blue data management infrastructures (SeaDataNet, EurOBIS, Euro-Argo, Argo GDAC, ELIXIR-ENA, EuroBioImaging, CMEMS, C3S, and ICOS-Marine) and horizontal e-infrastructures to capitalise on what already exists and to deliver the “Blue Cloud” framework.

The project holds great potential to deliver societal solutions via the implementation of five innovative demonstrators (www.blue-cloud.org/demonstrators), covering specific domains such as biodiversity and genomics, environment, fisheries and aquaculture. It is aware of the high importance of EOSC and data as a key resource for innovation and is working towards the establishment of a thematic marine EOSC serving the Blue Economy.

Through a smart federation of data resources, computing facilities, and analytical tools Blue-Cloud aims to provide researchers with access to: 1) Blue multi-disciplinary data from observations, in-situ and remote sensing, data products and outputs of numerical models. 2) A blue Virtual Research Environment (VRE) with various services to support its users in undertaking world class science.

What does Blue-Cloud bring to EOSC?

- A pilot thematic-EOSC as a role model for the development of other thematic clouds, with FAIR access to multidisciplinary data, analytical tools and computing and storage facilities that support multiple scientific research challenges.

- Services through pilot Demonstrators for oceans, seas and fresh water bodies for ecosystems research, conservation, forecasting and innovation in the Blue Economy.

- A mechanism to easily access and discover blue data, with APIs to access blue services that will complement EOSC base services providing blue thematic functionalities.

- Examples of how a framework like Blue-Cloud can address one or several of the policy challenges defined in many programmes, namely the Bioeconomy Strategy, the Circular Economy Strategy, the Blue Growth Strategy, the Common Fisheries Policy, the Maritime Spatial Planning Directive and the International Ocean Governance Communication.

- A Global Blue-Economy community close to the EOSC vision, including the marine and maritime industry.

- The opportunity to bring EOSC into the Blue Economy long-term vision via the policy-oriented Blue Cloud Roadmap to 2030, which seeks a series of EU Calls for further development and uptake of the Blue Cloud by multiple VRE applications and for connecting additional marine data infrastructures.

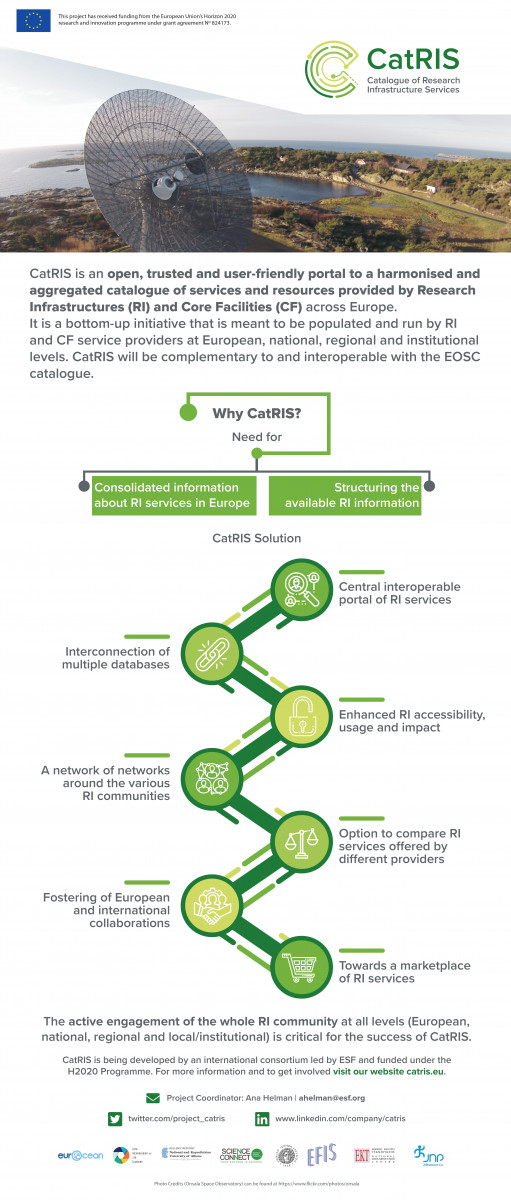

The European Research Infrastructures landscape is diverse and includes Research Infrastructure (RI) operators, managers, academic and industrial users, decision and policymakers, funders and other resource providers. Significant efforts have been directed towards gaining insight into available RIs, national RI road-mapping practices, and planning of pan-European RIs. A recent report by the OECD Global Science Forum highlights that “open digital platforms can have substantial value for a wide range of stakeholders”. Information on the services and/or resources provided by RIs is currently published in many different formats through websites and portals that are not always connected. In line with the ongoing development of a catalogue of e-infrastructure services and the needs of the broad RI community, the CatRIS project is developing a catalogue of European RI services focusing on physical infrastructures, core facilities (CF) and shared scientific resources (SSR). The CatRIS catalogue aims to be the central gateway for gathering and harmonizing these RI services from all around Europe and making them findable and accessible, thus contributing to the core objectives of the European Open Science Cloud for open access to and reuse of scientific resources and services.

The catalogue is being developed as a user-tailored portal, designed for user needs and beneficial for service providers; it is a bottom-up initiative that is meant to be populated and run directly by RI, CF and SSR service providers. CatRIS will support the scientific community by providing benefits to each of the targeted stakeholder categories: RI service providers, researchers and service users from academia and industry, policymakers and funders.

The CatRIS catalogue is now operational and the project team is busy with the first service provider onboarding phase. Stakeholder engagement plays a key role in achieving the aims of the CatRIS project.

The CatRIS project started in January 2019. It is being developed by an international consortium led by ESF and is funded under the H2020 Programme.

Identifiers.org has established itself as a stable system for identification and citation of life science data, using Persistent Identifiers (PIDs). Identifiers.org not only enables researchers to easily reference their data, but also provides a variety of useful, supporting web services.

We have released a brand-new, cloud-native identifiers.org platform focused on its main mission: the resolution of compact identifiers. This new platform brings more robust and reliable resolution services, new extended APIs for accessing and working with the registry, and the possibility for our users to deploy their own identifiers.org services in sync with our registry, on premises and in hybrid-cloud and multi-cloud environments.
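To make the idea of compact identifier resolution more concrete, the following minimal Python sketch splits a compact identifier of the form `prefix:accession` and builds the URL that the identifiers.org resolver would be asked to redirect. The function names and the simplified pattern are assumptions made for illustration; the real registry validates accessions per namespace, which this sketch does not attempt, and no network request is made.

```python
import re

# Resolver base URL; compact identifiers are resolved as
# https://identifiers.org/<prefix>:<accession>.
RESOLVER_BASE = "https://identifiers.org/"

# Simplified, assumed pattern: a lowercase prefix, a colon, a non-empty accession.
# Per-namespace accession rules from the registry are deliberately ignored here.
_COMPACT_ID = re.compile(r"^(?P<prefix>[a-z0-9._]+):(?P<accession>\S+)$")


def split_compact_id(compact_id: str) -> tuple[str, str]:
    """Split 'prefix:accession' at the first colon (hypothetical helper)."""
    match = _COMPACT_ID.match(compact_id.strip())
    if match is None:
        raise ValueError(f"not a compact identifier: {compact_id!r}")
    return match["prefix"], match["accession"]


def resolution_url(compact_id: str) -> str:
    """Build the resolver URL for a compact identifier (hypothetical helper)."""
    prefix, accession = split_compact_id(compact_id)
    return f"{RESOLVER_BASE}{prefix}:{accession}"


if __name__ == "__main__":
    print(resolution_url("taxonomy:9606"))
```

Splitting at the first colon keeps accessions that themselves contain a colon intact, for example `go:GO:0006915`.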

Research data management (RDM) has become a vital element in advancing open science via data sharing and reuse. To improve RDM, research organisations (research funders, councils, universities, academies, institutes, etc.) increasingly require researchers to develop a Data Management Plan (DMP) for their project proposals or for research evaluations. While researchers and research projects usually recognise the benefits of better RDM, describing standards, repositories, and data policies in a DMP, most of the time in a non-committal way, is often considered a burden. Domain Data Protocols (DDP) are intended to make life easier for researchers as well as for research organisations demanding DMPs. They essentially give guidelines or directives for data management for a particular domain, agreed upon by a research community and/or the organisations demanding a DMP. The fact that communities collectively set the standards and guidelines for DMPs also means that there are great opportunities to increase the FAIRness of research data in these communities. Within EOSC-hub and the EOSC community at large, the use of Domain Data Protocols in Data Management Plans will boost the developments around FAIR data.

The Distributed Computer is a framework for distributing computational workloads to underutilized computers and infrastructure, and features a credit-based system for metering the consumption of computing resources (such as CPU, GPU, memory, or I/O). While distributed (or cloud) computing is not entirely new, this model is unique. In the traditional cloud model, centralized data centers rent resources to customers. Put simply, it’s a one-sided marketplace. However, with the Distributed Computer, any user can both buy and sell computing resources – it’s a two-sided marketplace, similar to a smart electrical grid in which customers can sell excess electricity produced from solar panels. Computing networks supported by the Distributed Computer can be private and on-premises (such as a University harnessing thousands of computers it already owns), or publicly accessible (like the SETI@Home model in which millions of home desktops connect to a grid) in accordance with customer needs. Additionally, complex or sensitive workloads can be directed for execution at secure and high-performance sites (such as EGI or CENGN data centers). The Distributed Computer leverages existing network infrastructure and is 5G-ready through a partnership with ENQOR5G.
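The credit-based, two-sided model described above can be sketched in a few lines of Python. This is an illustration only, not the Distributed Computer's actual accounting: the resource names, the credit rates and the `Ledger` class are all hypothetical, chosen to show how metered usage could move credits from a buyer to a seller.

```python
from dataclasses import dataclass, field

# Hypothetical credit prices per metered unit; the source states no actual rates.
RATES = {
    "cpu_seconds": 1.0,
    "gpu_seconds": 4.0,
    "memory_gb_seconds": 0.25,
    "io_gb": 0.5,
}


def job_cost(usage: dict[str, float]) -> float:
    """Convert metered resource usage into credits using the assumed rates."""
    unknown = set(usage) - set(RATES)
    if unknown:
        raise ValueError(f"unmetered resources: {sorted(unknown)}")
    return sum(RATES[name] * amount for name, amount in usage.items())


@dataclass
class Ledger:
    """Toy two-sided ledger: any account can both spend and earn credits."""
    balances: dict[str, float] = field(default_factory=dict)

    def deposit(self, account: str, credits: float) -> None:
        if credits <= 0:
            raise ValueError("deposit must be positive")
        self.balances[account] = self.balances.get(account, 0.0) + credits

    def settle(self, buyer: str, seller: str, usage: dict[str, float]) -> float:
        """Charge the buyer for the metered usage and pay the seller."""
        cost = job_cost(usage)
        if self.balances.get(buyer, 0.0) < cost:
            raise ValueError("insufficient credits")
        self.balances[buyer] -= cost
        self.balances[seller] = self.balances.get(seller, 0.0) + cost
        return cost


if __name__ == "__main__":
    ledger = Ledger()
    ledger.deposit("research-lab", 100.0)
    ledger.settle("research-lab", "idle-desktop", {"cpu_seconds": 10, "gpu_seconds": 5})
    print(ledger.balances)
```

Because the same account type is used for buyers and sellers, a participant that earned credits by lending idle machines could later spend them on its own workloads, which is the point of the two-sided marketplace.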

A key challenge for FAIRsFAIR is to ensure project activities dovetail with work carried out by the EOSC Governance Working Groups, and feed into and complement work being done by other projects in the research data and FAIR space. FAIRsFAIR is looking in particular at four strategic assets:

DATA PRACTICES

In collaboration with established experts, FAIRsFAIR will document and further develop data practice and repository design to dramatically enhance data interoperability across scientific domains and geographic divides. A first set of Recommendations has already been released specifically on FAIR Semantics (D2.2 - https://zenodo.org/record/3707985#.XrQTqKgzaM8) and for Persistence and Interoperability (D2.1 - https://zenodo.org/record/3557381#.XrQULKgzaM8) together with reports on FAIR Data Practice Analysis (D3.2 - https://zenodo.org/record/3581353#.XrQUeagzaM8) and the Features of FAIR Data Repositories (D2.3 - https://zenodo.org/record/3631528#.XrQU3qgzaM8).

DATA POLICY

Based on a thorough review of the current policy landscape, FAIRsFAIR will make concrete recommendations to enhance FAIR policy and embed FAIR culture in institutes of higher learning. In this regard, a FAIR Policy Landscape Analysis report has been published (D3.1 - https://zenodo.org/record/3558173#.XrQWB6gzaM8), followed by Recommendations for Policy Enhancements (D3.3 - https://zenodo.org/record/3686901#.XrQWWqgzaM8). A report on FAIR in European Higher Education (D7.1 - https://zenodo.org/record/3629683#.XrQWvagzaM8) has also been released.

FAIR COMPETENCE CENTRE

FAIRsFAIR will establish an educational programme to equip researchers, trainers and institutes of higher learning across Europe with the information and skills required to produce and curate FAIR data. In this regard, an Overview of Needs for Competence Centres (D6.1 - https://zenodo.org/record/3549791#.XrQXSqgzaM8) and a preliminary definition of the FAIRsFAIR Core Competence Centre (D6.2 - https://zenodo.org/record/3732889#.XrQXyqgzaM8) have been published.

FAIR CERTIFICATION

FAIRsFAIR will establish a FAIR assessment tool and, by actively supporting selected repositories to achieve certification, create a European network of certified repositories. To this end, a Vision for the Components of a FAIR Ecosystem has been documented (https://zenodo.org/record/3565428#.XrQZMqgzaM8) alongside Draft Recommendations on Requirements for FAIR Datasets in Certified Repositories (D4.1 - https://zenodo.org/record/3678716#.XrQZ26gzaM8).

For this reason, the FAIRsFAIR project set up the Synchronisation Force, a team tasked with establishing a dialogue among the various projects and actors in both the EOSC and FAIR ecosystems whose work touches on FAIR. Its mandate is to maximise coordination and minimise unnecessary overlap or duplication, facilitate synergies between project activities and EOSC governance, and promote collaboration mechanisms aimed at turning FAIR into reality.

The EOSC Portal serves as one of the many entry points to the overall EOSC ecosystem; other entry points, including e-Infrastructures, regional and thematic portals as well as project-oriented catalogues of services and resources, will let users discover, explore, access and use resources belonging to EOSC offerings.

The EOSC Portal has been conceived to provide a European delivery channel bridging the demand-side and the supply-side of the EOSC and all its stakeholders.

In 2019, EOSC Enhance was funded by the European Commission to increase the demand and widen the user base of the EOSC Portal by developing and improving its functionality. The EOSC Portal forms part of the Access and Interface action line of the EOSC implementation roadmap.

This poster provides a short outline of selected EOSC-hub Key Exploitable Results (KERs) that are already in continuous, operational use and broadly adopted as mechanisms that support the federated service provision. All three highlighted KERs are primarily relevant for the architecture group and due to their general nature relevant to a number of stakeholder groups. The KERs presented in this poster are as follows:

1. EOSC Portal and Marketplace - Contributes to Architecture

The EOSC Portal website was created by a collaboration between EOSC-hub, OpenAIRE and partners from the former eInfraCentral project. It serves Service Providers, Researchers, Research Communities and Enterprise stakeholders, and supports a federated service ecosystem (service discovery and access to market).

2. Service Management System (SMS) - Contributes to Architecture and Rules of Participation

SMS comprises all activities by service providers to plan, deliver, operate and control services offered to customers. SMS is critical in integrating different providers into the common marketplace and monitoring frameworks in a way that provides value for EOSC.

3. Internal Services in the Hub Portfolio - Contributes to Architecture

This KER provides a common toolset for integrating services into the EOSC ecosystem. A mature implementation will both streamline the processes of the Hub operators and reduce the integration efforts needed by the service providers.

The poster also provides some background information about the project and its overall set of KERs.

EOSC-Pillar coordinates national Open Science efforts across Austria, Belgium, France, Germany and Italy, and ensures their contribution and readiness for the implementation of the European Open Science Cloud (EOSC).

EOSC-Pillar is one of the EOSC regional projects funded under the INFRAEOSC 5b call and will work in sync with other regional projects as they coordinate the efforts of the national and thematic initiatives in making a coherent contribution to EOSC. While the project involves only five countries in Europe, the aim is to demonstrate that a localised, science-driven approach to the implementation of the EOSC is efficient, scalable and sustainable, and can be effectively rolled out in other countries.

National initiatives are the key of EOSC-Pillar’s strategy for their capacity to attract and coordinate many elements of the complex EOSC ecosystem and for their sustainability, adding resilience. By federating them through common policies, FAIR services, shared standards, and technical choices, EOSC-Pillar will be a catalyst for science-driven, transnational, open data and open science services offered through the EOSC Portal.

Its key results include (1) LANDSCAPING: A comprehensive understanding of the state of open research data and services across central Europe through an extensive survey and its analysis, (2) SERVICES: New services integrated into EOSC from national initiatives and its Open Call, tested through use cases, as well as tools and training for the promotion of FAIR culture, and (3) SUSTAINABILITY: Policy and legal frameworks as well as business models that will allow the sustainability of EOSC-Pillar services even after the project's conclusion.

The European Science Cluster of Astronomy & Particle physics ESFRI research infrastructures (ESCAPE) addresses the Open Science challenges shared by ESFRI facilities (CTA, ELT, EST, FAIR, HL-LHC, KM3NeT and SKA) as well as other pan-European research infrastructures (CERN, ESO, EGO, JIVE and LSST) in astronomy and particle physics.

The ESCAPE work programme provides a multi-probe and cross-domain ESCAPE EOSC Cell for the FAIRness of data and software interoperability with the following well-defined objectives:

• Implementing Science Analysis Platforms for EOSC researchers to stage data collections, analyse them, access ESFRIs’ software tools, bring their own custom workflows.

• Contributing to the EOSC global resources federation through a Data-Lake concept implementation to manage extremely large data volumes at the multi-Exabyte level.

• Supporting “scientific software” as a major component of ESFRI data to be preserved and accessed in EOSC through a dedicated open-source scientific software and service repository.

• Implementing a community foundation approach for continuous software shared development and training new generation researchers.

• Extending the Virtual Observatory standards and methods according to FAIR principles to a larger scientific context; demonstrating EOSC capacity to include existing frameworks.

• Involving the science-inclined public in knowledge discovery through a harmonised suite of citizen science experiments.

This ESCAPE EOSC Cell will connect ESFRI projects to EOSC ensuring integration of data and tools, to foster common approaches to implement open-data stewardship and to establish interoperability within EOSC as an integrated multi-probe facility for fundamental science.

The ESCAPE EOSC cell will improve the engagement of an active and diversified researcher community by supporting some of the test science projects such as Dark Matter Initiatives to achieve dark-matter scientific goals and related community building.

Citizen science is one of the eight priorities of the European Open Science Agenda, together with the creation of the European Open Science Cloud (EOSC) (European Commission, 2019). Within this context, the European Union has promoted Cos4Cloud (Co-designed Citizen Observatories Services for the European Open Science Cloud), a project to boost citizen science technologies and integrate them into the digital service ecosystem of EOSC, in order to ensure the long-term viability of citizen science platforms (also known as citizen observatories) and help them reach a global scope.

The set of services that starts up EOSC’s ecosystem is called the Minimum Viable Ecosystem (MVE). The MVE for citizen observatories (COs) proposed by Cos4Cloud consists of a co-designed and prototyped set of services focused on interoperability, innovative models of collaboration and architecture for federated infrastructures, so that all citizen observatories and their components are able to interact and establish synergies among them.

The citizen science infrastructures in Europe take the name of Citizen Observatories (COs), characterized by their focus on observing the environment (rather than other phenomena), the scale of their activities (typically local), and their timeline (typically long term). Currently, there are dozens of citizen observatories in Europe. The growth of these COs is producing a citizen science community that is continuously expanding, not only in Europe but also on a global scale (Gold, M., 2018).

This, in turn, poses large-scale challenges for citizen observatories, which must address:

- Efficient capture, identification and validation of data.

- Interaction between participants based on a model that allows the transfer of knowledge, and the stewardship and storage of large volumes of data in different formats (photos, sounds, camera trap images...).

- Interoperability at a local, regional, and global level that allows scaling the impact of the data, overcoming the geographic, thematic, or even linguistic barriers to doing research.

- Long-term sustainability, given the difficulty of obtaining resources to develop functionalities based on cutting-edge technologies.

The services developed by Cos4Cloud will address these COs’ challenges. During the project, co-design activities will also be carried out with key stakeholders to discuss and improve the proposed services according to citizen science needs. In addition, in order to assess the services, 9 citizen science platforms focused on biodiversity and environmental monitoring participate in Cos4Cloud and will test the technological services with their users (Cos4Cloud Consortium, 2019).

Citizen science and citizen observatories in EOSC

In order to reach a global scope, Cos4Cloud’s innovative citizen science services need to be integrated in EOSC and made available to the entire scientific community in the EOSC digital ecosystem. The services will be presented as modules, so that each existing citizen observatory will be able to choose and install the technological services it needs to improve its functionality.

References

European Commission (2018). Prompting an EOSC in practice: Final report and recommendations of the Commission 2nd High Level Expert Group [2017-2018] on the European Open Science Cloud (EOSC). Brussels. https://doi.org/10.2777/112658

Gold, M. (2018). We Observe: An Ecosystem of Citizen Observatories for Environmental Monitoring. D2.1 EU Citizen Observatories Landscape Report - Frameworks for mapping existing CO initiatives and their relevant communities and interactions.

Cos4Cloud Consortium (2019). Co-designed citizen observatories services for the European Open Science Cloud. Grant Agreement No. 863463.

Typically, HPC environments are characterized by software and hardware stacks optimized for maximum performance at the cost of flexibility in terms of OS, system software and hardware configuration. This close-to-metal approach creates a steep learning curve for new users and makes it hard for external services, especially cloud-oriented ones, to interoperate with these environments.

The DEEP-Hybrid-DataCloud project aims at developing a distributed architecture to leverage intensive computing techniques for deep learning. In the framework of this project, we have been investigating how to integrate existing production HPC environments with higher level services (PaaS orchestration).

The abstraction offered by our solution simplifies the interaction for end users thanks to the following key features:

- Standard interfaces are used to manage different workloads and environments, both cloud and HPC-based: the TOSCA language is used to model the jobs and the PaaS Orchestrator creates a single point of access for the submission of the processing requests.

- A REST API gateway (QCG Computing) implements the bridge between the HPC batch cluster and the PaaS services providing the interfaces to submit and monitor the jobs from outside the HPC site.

- A unified AAI is adopted throughout the whole stack, from the PaaS to the data and compute layer: it is implemented by the INDIGO IAM service, which provides federated authentication based on OpenID Connect/OAuth 2.0 mechanisms.

- The execution of containerized applications accessing specialized hardware (e.g. GPUs and InfiniBand) is simplified using a powerful tool like udocker.

- The data management and sharing is facilitated by the usage of a distributed storage system (Onedata).
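The combination of a REST gateway and token-based authentication listed above can be illustrated with a minimal Python sketch. It only builds an HTTP request carrying an OAuth 2.0 bearer token and a JSON job description; it does not contact any service. The gateway URL, the token placeholder and the payload fields are hypothetical and do not reflect the actual QCG Computing or PaaS Orchestrator APIs.

```python
import json
import urllib.request


def build_job_request(gateway_url: str, access_token: str, job: dict) -> urllib.request.Request:
    """Build (but do not send) a job-submission request for a REST gateway.

    The token would normally be obtained from the identity provider through an
    OpenID Connect flow; here it is simply passed in by the caller.
    """
    body = json.dumps(job).encode("utf-8")
    return urllib.request.Request(
        url=gateway_url,
        data=body,
        headers={
            # Standard OAuth 2.0 bearer token usage in the Authorization header.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical endpoint and job description, for illustration only.
request = build_job_request(
    "https://hpc-gateway.example.org/jobs",
    "<access-token>",
    {"executable": "train.sh", "gpus": 1},
)

if __name__ == "__main__":
    print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the sketch easy to inspect offline; a real client would pass the request to `urllib.request.urlopen` and handle the gateway's responses.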

The Open Clouds for Research Environments project (OCRE) aims to accelerate cloud adoption in the European research community by bringing together cloud providers, Earth Observation (EO) organisations, companies and the research and education community. This will be achieved through ready-to-use service agreements and €9.5 million in adoption funding facilitated through cloud vouchers.

The key outputs of OCRE include (1) COMMERCIAL CLOUD & EARTH OBSERVATION SERVICES: Through the OCRE Tender, OCRE will source commercial SaaS, PaaS and IaaS and digital Earth Observation services demanded by researchers, (2) LEGAL & TECHNICAL MECHANISMS: OCRE will propose legal and technical mechanisms to integrate these supplementary commercial services into the EOSC, and (3) BUSINESS MANAGEMENT PLATFORM: OCRE will develop a Business Management Platform to track services/resource consumption or usage.

Overall, OCRE provides a number of advantages for both the demand and supply sides of future EOSC commercial services.

On the demand side, OCRE will let research institutions take advantage of innovative commercial services through a more streamlined process, so that less time is needed to discover and acquire the services they need from the market. Researchers themselves, by benefiting from vouchers and increasing their uptake of commercial services from diverse providers, will enjoy better tools to carry out their work.

On the supply side, for commercial cloud service providers, the legal, financial, and technical compliance barriers will be minimised. OCRE will make market requirements easier to understand, allowing providers to tailor their offering to the research community. Earth Observation SMEs in particular will be introduced into the "marketplaces" used by research communities to procure services. This opens up new opportunities and provides them with a better view of the market for their niche solutions.

SKA is an international project to build the largest and most sensitive radio telescope ever conceived. A worldwide distributed network of SKA Regional Centres (SRCs) will host and provide access to the SKA data, to the analysis tools and processing power. The SRCs will be at the core of the exploitation of SKA data, being the place where the science will be done. The Institute of Astrophysics of Andalusia-CSIC (IAA-CSIC) is both developing an SRC that will be aligned with the Open Science Principles and contributing to build EOSC through our participation in the H2020 ESCAPE project and the Spanish Thematic Network in Open e-Science.

In this poster, we show our work to make an astrophysics paper (Jones et al., 2019) reproducible using existing technologies and tools such as git/GitHub, Conda, Docker, Jupyter notebooks and EOSC resources (the main repository for the project is at https://github.com/AMIGA-IAA/hcg-16). EOSC played a central role in the progress of our work by enabling convenient access to compute (EGI fedcloud) and data (EUDAT B2SHARE) resources, forming a collaborative environment in which to develop and test software for the project. We will share our experience with EOSC resources from the end user's point of view so that other researchers can identify how to benefit from EOSC. This work represents a step forward in bringing Open Science into the SRCs and in paving the way for how astronomers and scientists approach the challenge of reproducible science.

Observing the ocean is challenging: missions at sea are costly, different scales of processes interact, and the conditions are constantly changing. This is why scientists say that "a measurement not made today is lost forever". For these reasons, it is fundamental to properly store both the data and metadata, so that access to them can be guaranteed for the widest community, in line with the FAIR principles: Findable, Accessible, Interoperable and Reusable.

Long-term data archiving procedures have been specified in the PHIDIAS use case in Ocean data testing and rely on the HPC (high-performance computing) and HPDA (high-performance data analytics) expertise of the PHIDIAS partners, aimed at improving the usage of cloud services for marine data management, data services to the user in a FAIR perspective, and data processing on demand.

The mission of the PHIDIAS ocean use case is to improve the long-term stewardship of marine in-situ data, data storage for services to users, and marine data processing workflows for on-demand processing. The data testing is led by IFREMER, together with Europe's leading research groups in ocean studies, such as Université de Liège, MARIS, CNRS, CSC and the Finnish Environment Institute, under the coordination of CINES, the leading HPC centre in France, with support from other PHIDIAS partners that are actively engaged in this initiative.

This poster will share the current value-added activities, strategies and future plans of the PHIDIAS ocean use case. It will showcase the benefits of a strategic and collaborative approach to continuing to boost cloud services for marine data management, services and processing for end-users (including scientific communities, public authorities, private players and citizen scientists).

Pidforum.org is a global platform for anything and everything related to persistent identifiers (PIDs). PIDs play a crucial role in making data FAIR and providing sustainable services within the EOSC infrastructure. The PID Forum is meant to be a place for people working on the FAIR data infrastructure to exchange, connect and collaborate.

This poster introduces the PID Forum and its possibilities to the EOSC-Hub community. The PID Forum was set up by the FREYA project (https://www.project-freya.eu/en) in 2019 as a community space to discuss, exchange and engage on PID-related matters.

The Forum includes different sections dedicated to specific categories, including PID best practices, events, news as well as a PID Graph category for the graphs that are developed in different projects like FREYA or OpenAIRE-Advance. The PID Forum also hosts a category on the EOSC PID policy that was developed by representatives of the EOSC FAIR Working Group and EOSC Architecture Working Group. Moreover, the PID Forum also includes a Knowledge Hub that provides learning materials and educational resources on PIDs, which members recently started to translate into multiple languages.

The PID Forum also offers the possibility to host private spaces for exchange between specific PID communities, like FREYA ambassadors, or the PIDapalooza organisation team.

The PID Forum was set up as a community platform and this poster would like to raise awareness and invite the EOSC-Hub community to join and contribute.

See you all on pidforum.org!

Policy Cloud services will turn illegible data into a readable format through innovative analytical tools. Using this now readable data, our policy-oriented modelling tools inform policy in the way public administrations or businesses need.

Our tools come with built-in privacy and security for sensitive data. They are part of six main services that have been designed to take vast amounts of raw data, clean it, sort it, and adapt it to a policy-making scenario.

These Policy Cloud services will be delivered on the EGI federated cloud through EOSC.

Policy Cloud will create a major change in how we use data. Public participation through crowdsourcing of data will become far more streamlined, easy and ethically positive. It will enlarge the evidence base for effective policy, making it more predictable, and will facilitate interoperability through reusable tools.

These are not just plans and hopes but ideas that are happening now. We have four pilot cases that are implementing all aspects of Policy Cloud. Based in Bulgaria, Italy, Spain and the UK, they range from fighting radicalisation online to creating a more sustainable agricultural environment. The list of interesting uses goes on, and you can find more information, including interviews, articles and graphics, on our newly revamped website. So join our community: the exciting journey has only just begun.

The integration of widely used frameworks for the management and processing of data typically faces the complexity of managing multiple credentials for different services. Moreover, containerised infrastructures usually make multitenancy difficult to manage.

We have implemented an architecture that leverages EGI Check-in and VO Groups to seamlessly manage the authentication, authorisation and isolation of a data processing deployment that includes Kubernetes as the processing back-end and OneData to manage the distributed storage.

Users are authenticated using any of the IdPs supported by the EGI Check-in system. Users are enrolled in a specific VO, which grants the right to access the resources of the collaboration.

- The Kubernetes endpoint is preceded by an nginx service which captures the requests. Unauthenticated users are redirected to EGI Check-in, which performs the authentication. When nginx receives the user credentials, requests are redirected to a module called kube-authorizer, which checks whether this is the first time the user has logged into the Kubernetes platform and, if so, automatically creates the user's namespace, service account, access token, role bindings, and configmaps.

- The storage back-end is implemented through a Persistent Volume synchronized with a second provider through OneData. Containers mount the filesystem using OneClient, which uses FUSE to mount the volumes in the container space. Authentication is performed through tokens that are automatically created when the user logs in to the system.
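The first-login step described above can be sketched as follows. This is a minimal illustration and not the kube-authorizer code: the `Cluster` class is an in-memory stand-in for the Kubernetes API, and the function names, the namespace naming scheme, the resource names and the VO membership check inside `authorize` are all assumptions made for the example.

```python
from dataclasses import dataclass, field
import secrets


@dataclass
class Cluster:
    """In-memory stand-in for the Kubernetes API (hypothetical, illustration only)."""
    namespaces: dict = field(default_factory=dict)


def namespace_for(user_id: str) -> str:
    # Assumed naming scheme: keep ASCII letters and digits, replace the rest
    # with '-', and respect the 63-character limit on DNS labels.
    safe = "".join(c if c.isascii() and c.isalnum() else "-" for c in user_id.lower())
    return ("user-" + safe)[:63].rstrip("-")


def authorize(cluster: Cluster, user_id: str, vo_groups: set, required_vo: str) -> dict:
    """Return the user's resources, provisioning them on first login only."""
    # Assumption: VO membership arrives with the authenticated identity.
    if required_vo not in vo_groups:
        raise PermissionError(f"{user_id} is not a member of {required_vo}")

    ns = namespace_for(user_id)
    if ns not in cluster.namespaces:
        # First login: create the per-user resources listed in the text.
        cluster.namespaces[ns] = {
            "service_account": f"{ns}-sa",
            "token": secrets.token_urlsafe(32),
            "role_binding": f"{ns}-edit",
            "configmap": {"owner": user_id},
        }
    # Later logins reuse the existing namespace and token.
    return cluster.namespaces[ns]
```

Calling `authorize` twice for the same user returns the same record, so provisioning is idempotent, and each user is confined to a separate namespace, which is what provides the isolation between tenants.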

The Spanish Thematic Network in the field of Open e-Science (REEC) is an inclusive initiative that combines efforts, shares information and aims to strengthen the position of Spanish institutions within the framework of the European Open Science Cloud (EOSC).

The Network is an instrument funded by the Ministry of Science and Innovation in the 2018 call for Dynamization Actions - Research Networks, with code RED2018-102377-T. It is composed of relevant actors in the areas of technological knowledge, research infrastructure providers and scientific communities, as well as those responsible for defining and implementing national policies that encourage the adoption of the EOSC by the Spanish scientific community, following the Roadmap agreed by the European Commission (goo.gl/DRJHUP).

This network is built on top of the results of a set of national and European projects in the field of open e-Science and seeks to coordinate, share and reinforce these results in an international context.

The general objectives of the network are:

- To promote communication between network groups and related external actors, sharing the experience and activities they are developing within the framework of the EOSC.

- To promote Research, Development and Innovation activities and contribute to the advancement of knowledge, spreading the concept of EOSC and promoting the involvement of Spanish actors in open science activities both nationally and internationally, as well as promoting access by Spanish users (researchers) to EOSC and by international researchers to Spanish EOSC services.

- To face the challenges of Spanish research at the international and national level, strengthening Spanish participation in the EOSC by increasing its presence in forums, resources and catalogues of services, and encouraging national and regional funding agencies to facilitate researchers' access to EOSC services.

- To generate a sustainability plan and business opportunities.

The Research Data Alliance provides a unique international forum in which data standards, sharing agreements, best practices, tools and infrastructure can be worked on collectively across geographic and disciplinary boundaries. RDA and EOSC share a common vision about reducing barriers to research data sharing, and within the EOSC context, the RDA has been identified as a key vehicle in implementing the EOSC and coordinating international activity within the EOSC workplan.

Members of the RDA community are prominent in all three EOSC Governance bodies and RDA members are present in all EOSC Working Groups. In addition, many of the RDA Europe 4.0 national nodes are involved in EOSC projects, whether they be horizontal eInfrastructure initiatives such as EOSC-hub, OpenAIRE, EOSC Nordic, EOSC-Pillar and NI4OS or disciplinary and thematic initiatives such as SSHOC, ESCAPE and FAIRsFAIR.

A survey by RDA Europe 4.0 found that EOSC projects are actively engaged with RDA in numerous ways. For example, several projects are implementing recommendations from specific RDA Working Groups while others use the RDA community as a way to get feedback on their outputs and build their international network of researchers and data practitioners. In several cases, EOSC projects have been involved in the formation of RDA groups to develop community-endorsed technical solutions for a particular data challenge. RDA groups of particular significance to EOSC projects include the FAIR Data Maturity Model WG, the Research Data Repository Interoperability WG, and the Active Data Management Plans IG.

This poster presents highlights of how RDA is integrated with EOSC, including key benefits, challenges and ongoing collaborations. Based on data collected from EOSC projects, it gives an effective picture of how RDA plays an important role in strengthening European engagement in EOSC and aligning this initiative with global activity.

Coastal margin observatories offer the flexibility to support both responses to emergencies and the long-term management of coastal regions. However, existing coastal observatories for water quality are typically field data repositories, and model hindcast/forecast products are not frequently integrated with these data hubs. Herein, we propose and implement an innovative observatory, the UBEST coastal observatory. The UBEST observatory is an operational framework that provides integrated data-model approaches to achieve continuous surveillance of the water quality in coastal systems. In particular, this tool provides several flexible data-model services that can support both the application of the European Water Framework Directive and the emergency response in case of contamination events.

The UBEST observatory portal (http://portal-ubest.lnec.pt/) relies on a user-friendly web portal that provides detailed information about the water conditions in a given coastal system and the associated services (data repository and WFS/WMS map server). Several layers of information, with different levels of aggregation, are available: i) historical data, ii) real-time data from monitoring networks, iii) daily forecasts of physical and biogeochemical variables simulated with operational models, iv) simulations of scenarios of climate change and anthropogenic pressures, and v) indicators that summarize both the physical behavior and the water quality status of the systems. The applicability of the observatory is demonstrated at two coastal sites, the Tagus estuary and the Ria Formosa. The UBEST observatory is customizable, so other coastal systems can easily be added to the observatory portal.

The implementation of this concept of observatories poses several challenges, including the requirement of significant computational resources. High Performance Computing (HPC) is a powerful enabling resource for coastal water quality observatories. In UBEST, HPC is used i) for high-resolution simulations of circulation and water quality forecasts and scenarios and ii) to provide computational power to process data and model results through predefined tasks or user requests at the web portal.

Challenges still remain for a broad application of the UBEST observatory concept. One such challenge is the availability of computational resources for the daily water quality predictions and indicators at the required fine spatial scales, which can be addressed through integration with high-performance or distributed computing environments such as the European Open Science Cloud (EOSC). Another challenge is the capacity to build up a multidisciplinary team of coastal scientists and IT experts to adapt UBEST to their system of interest. A potential solution may be the development of a UBEST e-service, where any user can interact with an on-demand web platform to build his/her own system. This concept has already been demonstrated for coastal hydrodynamic predictions in the EOSC-hub computational infrastructure (service OPENCoastS available at https://marketplace.eosc-portal.eu/services/opencoasts-portal).

The SSH Open Marketplace, developed in the context of the SSHOC project, is the only fully-integrated discovery portal which pools and harmonises the tools and services useful for the SSH research communities, offering a high quality and contextualised answer at every step of the research data life cycle.

The SSH Open Marketplace is based on three main pillars:

1. Integration with existing EOSC services:

The SSH Open Marketplace is fully integrated in the EOSC landscape, for example by using the EOSC Federating Core, especially the Federated Identity (AAI) services and the helpdesk.

- Harmonise views on common themes and foster contact with other organisations operating in the EOSC environment

- Integration of the marketplace with other EOSC catalogues or marketplaces

- SSHOC Data and Metadata Interoperability Hub

2. Fostering Open Science in the SSH domain

A researcher needs to perform a textual analysis on historical texts: the SSH Open Marketplace will not only offer software and services suitable for the task, but also tutorials explaining how to properly use the software, as well as academic articles and other resources.

- Discovery portal for the SSH domain

- Contextualisation to adopt and reuse the content of the SSH Open Marketplace, whether it is a piece of software, a dataset, a research workflow or a scientific article

- Centered around the research scenarios of the SSH community in which training materials have a central role to play

3. Community-driven curation

The SSH Open Marketplace follows a community-driven approach in building the SSH part of EOSC. It is a social infrastructure.

- Community-based curation

- Three curation pillars: automatic ingest and update of data sources; continuous curation of the information by the editorial team; and, most importantly, contributions from its users

- Collaborative and user-centric enhancement of content and context

The alpha release of the SSH Open Marketplace is planned for June 2020.

Acting as a collective of procurers, the ARCHIVER consortium aims to create an ecosystem for specialist ICT companies active in archiving and digital preservation that are willing to introduce innovative services capable of supporting the expanding needs of research communities, under a common innovative procurement activity for the advanced stewardship of publicly funded data in Europe. These innovative services will be ready to be commercialized and will become part of the catalogue of the European Open Science Cloud by December 2021, and will therefore be useable by the public research sector in Europe. These services, which ensure long-term preservation of scientific data and make it FAIR, are among the five types foreseen in the EOSC Strategic Implementation Plan.

The ARCHIVER Tender was closed on 28 April. 15 consortia including 43 companies and organisations applied to the ARCHIVER Request for Tenders. The best bids will be selected to start the Design Phase for the ARCHIVER data preservation service in June 2020.

Service onboarding

The processes of "on-boarding" services into EOSC can provide a baseline for ensuring services are compliant with these conditions. As part of its contribution to EOSC, ARCHIVER foresees a set of “derived rules” for onboarding commercial services. They include:

- Technical rules: extensive field testing; “research data ready” archiving and preservation services

- Legal Compliance: GDPR as an opportunity; high quality digital services guaranteeing digital sovereignty; legal certainty for researchers on how they can produce and use data

- Financial Transactions: Business models adapted to research needs, public procurement cycles & research grants

ARCHIVER & Industry

A number of SMEs today already offer SaaS data archiving and preservation services. However, it has not yet been demonstrated whether the existing SME offerings can match the demands of the public research sector, which preserves data volumes in the petabyte region and/or manages the complexities of large-scale scientific communities. ARCHIVER is engaging with SMEs, public cloud providers and public sector entities with expertise in long-term data preservation, establishing an agile R&D process to co-develop new services and fostering a model that provides the structure and flexibility to onboard the resulting services, validated at scale, to satisfy challenging research use cases.

There are a number of advantages for the SMEs selected for the ARCHIVER Pre-Commercial Procurement Tender. These include performing R&D on archiving and preservation services aligned with the relevant standards and European legislation (e.g. GDPR and Free Flow of Data), as well as gaining access to a well-defined, multi-disciplinary research sector base and promoting a hybrid cloud model so that a continuum of services is available to European researchers.